Lenovo Unveils First AI Inferencing Server Compact Enough to Bring Enterprise-Level AI Anywhere

Lenovo, a global technology powerhouse, redefines flexibility and efficiency for Artificial Intelligence (AI) at the edge with the first-to-market, entry-level AI inferencing server, designed to make edge AI accessible and affordable for SMBs and enterprises alike¹. Showcased as part of a full stack of cost-effective, sustainable, and scalable hybrid AI solutions at MWC25, the Lenovo ThinkEdge SE100 bridges the gap between client and edge servers to deliver enterprise-level intelligence for every business and is a critical link to enabling AI capabilities for all environments.

Lenovo continues to deliver on its mission of enabling smarter AI for all by making implementation feasible for every business, in every industry, with infrastructure that goes beyond traditional data center architecture to bring AI to the data. Rather than sending the data to the cloud to be processed, edge computing uses devices located at the data source, reducing latency so businesses can make faster decisions and solve challenges in real time.

By 2030, the edge market is projected to grow at an annual rate of nearly 37%. Lenovo is leading this moment with the industry’s broadest portfolio of edge computing infrastructure and over a million edge systems shipped globally, achieving 13 consecutive quarters of growth in edge revenue while expanding AI computing’s reach.

“Lenovo is committed to bringing AI-powered innovation to everyone with continued innovation that simplifies deployment and speeds the time to results,” said Scott Tease, Vice President of Lenovo Infrastructure Solutions Group, Products. “The Lenovo ThinkEdge SE100 is a high-performance, low-latency platform for inferencing. Its compact and cost-effective design is easily tailored to diverse business needs across a broad range of industries. This unique, purpose-driven system adapts to any environment, seamlessly scaling from a base device, to a GPU-optimized system that enables easy-to-deploy, low-cost inferencing at the edge.”

AI Inferencing Performance Designed for Life at the Edge

The new AI inferencing server is 85% smaller² and 100% powerful. Meeting customers where they are, it redefines edge AI with powerful, scalable, and versatile performance that accelerates ROI. Despite a threefold increase in spending, recent global IDC research commissioned by Lenovo reveals that ROI remains the greatest barrier to AI adoption. Adaptable for desktops, wall mounts, ceilings, and 1U racks, the SE100 is engineered to be uniquely affordable, breaking price barriers with AI inferencing performance that supports better business outcomes while lowering costs.

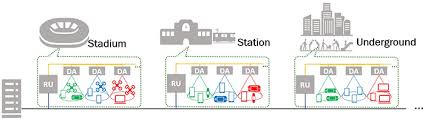

As the most compact, AI-ready edge solution on the market³, the Lenovo ThinkEdge SE100 is perfect for constrained spaces without compromising performance and answers customer demand for accelerated AI-level compute that can go anywhere. The breakthrough server delivers enterprise-level, whisper-quiet performance with enhanced security features in a design that is GPU-ready for AI workloads, like real-time inferencing, video analytics and object detection across telco, retail, industrial and manufacturing environments.

The ThinkEdge SE100 maximizes performance in an AI-ready server, evolving from a base device to an industrial-grade, GPU-optimized solution as the end user sees fit. The ThinkEdge SE100 can be equipped with up to six or eight performance cores, ensuring the device’s power is maximized in a smaller footprint.

Edge AI Inferencing in Action

Across industries, the inferencing server is designed for low latency and workload consolidation, supporting hybrid cloud deployments and machine learning for AI tasks like object detection and text recognition, all while maintaining data security. The ThinkEdge SE100’s rugged design includes dust filtering and tamper protection to give peace of mind for use in real-world environments. Robust security controls, including USB port disabling and disk encryption, serve to protect sensitive data from outside threats.

“Together, Lenovo and Scale Computing are delivering innovative, enterprise-grade solutions to customers worldwide. Leveraging the ThinkEdge SE100, we are bringing together Lenovo’s industry-leading hardware reliability with Scale Computing’s proven edge software to create a purpose-built HCI solution for AI at the edge,” said Jeff Ready, CEO and co-founder of Scale Computing. “With its compact, power-efficient design and enterprise-class performance, the ThinkEdge SE100 is redefining how businesses of all sizes deploy scalable, resilient infrastructure in the most demanding environments. This latest innovation reflects Lenovo’s leadership in edge-AI computing and our shared commitment to bringing the future of intelligent, AI-powered infrastructure to businesses now.”

Retailers can use the SE100 to power inventory and associate management or support loss prevention at self-checkout. Manufacturers can use it to power quality assurance capabilities and warehouse monitoring. Healthcare professionals can equip remote offices with a ThinkEdge SE100 server to power process automation, handling lab data and ensuring back-office efficiency. Finally, energy companies can use the ThinkEdge SE100 in gas stations and refineries, helping with power logistics and smart meters.

Enabling New Levels of Efficiency and Scalability

Lenovo’s Open Cloud Automation (LOC-A) and a Baseboard Management Controller (BMC) built into the device eliminate traditional deployment difficulties for seamless installations. Deployment costs are reduced by up to 47%, and users experience both resource and time savings of up to 60%⁴. After deployment, the ThinkEdge SE100 also provides ease of management through Lenovo’s latest version of XClarity, which centralizes infrastructure monitoring, configuration and troubleshooting in a single pane of glass.

Lenovo ThinkEdge SE100 supports robust AI performance without compromising energy efficiency to optimize total cost of ownership and support sustainability commitments. The SE100’s system power is designed to stay under 140W even in its fullest GPU-equipped configuration, supporting lower power consumption. Additionally, Lenovo’s energy-saving implementations reduce overall carbon emissions of the ThinkEdge SE100 by up to 84%⁵. This starts before the device is even turned on, thanks to a 90% EPE packaging design that cuts its transportation footprint. Design elements, like a new low-temperature solder and post-consumer recycled content, along with Lenovo’s Product End-of-Life Management (PELM) program, also support a transition to a circular economy.

Across its full-stack hybrid AI portfolio, Lenovo delivers powerful, adaptable, and responsible AI solutions to transform industries and empower individuals with innovations that enable Smarter AI for all. The SE100 is part of the new ThinkSystem V4 Servers Powered by Intel, which also extend Lenovo Neptune liquid cooling from the CPU to the memory with the new Neptune Core Compute Complex Module. The module supports faster workloads with reduced fan speeds, quieter operation and lower power consumption. It is specifically engineered to reduce airflow requirements, which also keeps the parts cooler for improved system health and lifespan.
