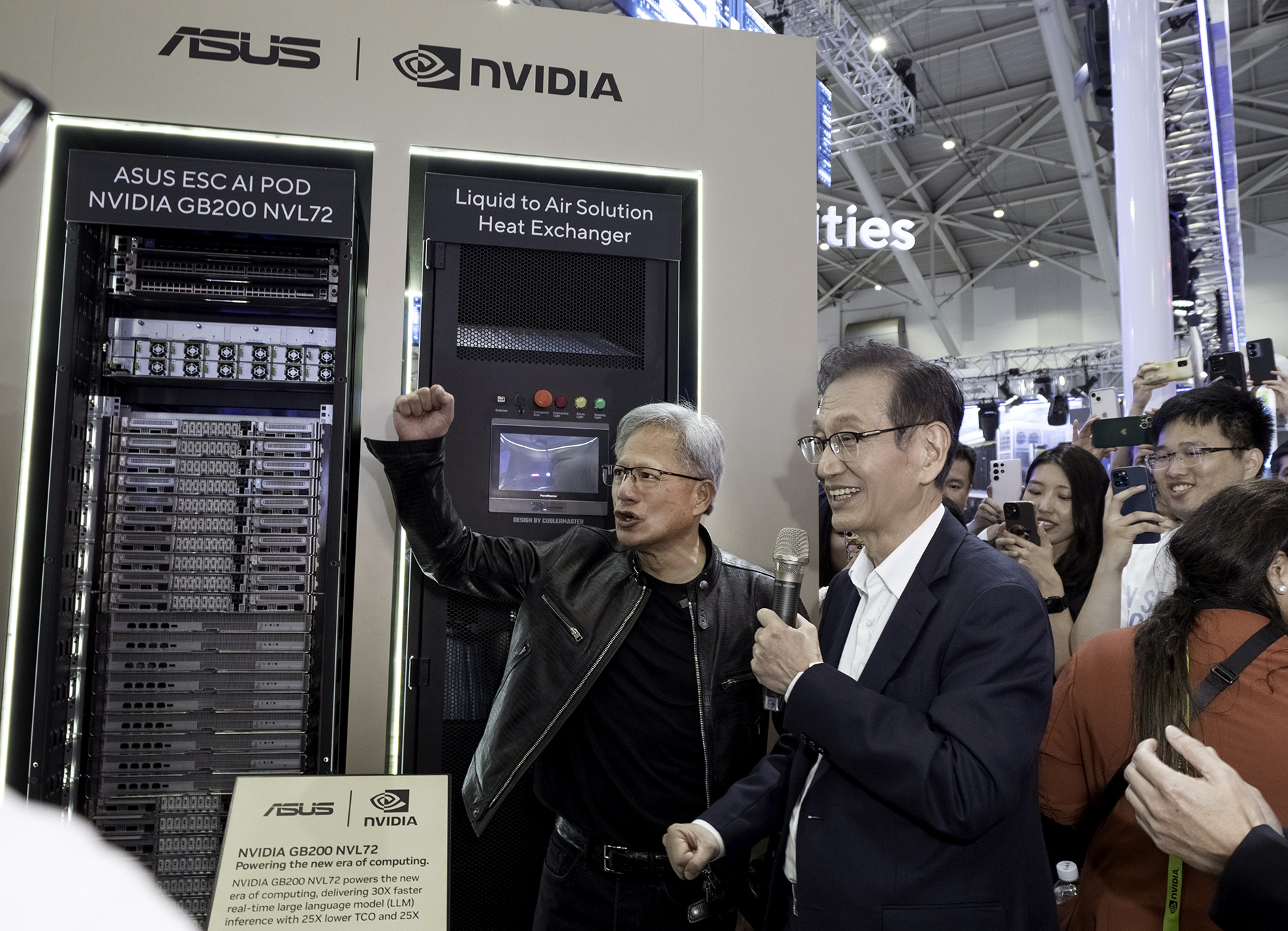

ASUS Presents ESC AI POD With NVIDIA GB200 NVL72 at Computex 2024

ASUS announced its latest exhibition at Computex 2024, showcasing a comprehensive range of AI servers for applications from generative AI to innovative storage solutions. The highlight of the event is the pinnacle of ASUS AI solutions - the all-new ASUS ESC AI POD with the NVIDIA® GB200 NVL72 system. Also on display are the latest ASUS NVIDIA MGX-powered systems, including ESC NM1-E1, ESC NM2-E1 equipped with NVIDIA GB200 NVL2, and ESR1-511N-M1 with NVIDIA GH200 Grace Hopper Superchips, as well as the latest ASUS solutions embedded with the NVIDIA HGX H200 and Blackwell GPUs.

ASUS is committed to delivering end-to-end solutions and software services, spanning from hybrid servers to edge-computing deployments. Through this showcase, ASUS demonstrates its expertise in server infrastructure, storage, data center architecture and secure software platforms, aiming to drive widespread AI adoption across diverse industries.

Introducing ASUS ESC AI POD (ESC NM2N721-E1 with NVIDIA GB200 NVL72)

Extensive ASUS expertise in crafting AI servers with unparalleled performance and efficiency has been bolstered by its collaboration with NVIDIA. One of the highlights of the showcase is the NVIDIA Blackwell-powered, scale-up, larger-form-factor system - ESC AI POD with NVIDIA GB200 NVL72. The full rack solution is a symphony of GPUs, CPUs and switches harmonizing in lightning-fast, direct communication, turbocharging trillion-parameter LLM training and real-time inference. It is equipped with the latest NVIDIA GB200 Grace Blackwell Superchip and fifth-generation NVIDIA NVLink technology, and supports both liquid-to-air and liquid-to-liquid cooling solutions to unleash optimal AI computing performance.

Paul Ju, Corporate Vice President for ASUS and Co-Head of Open Platform BG, said, "Our partnership with NVIDIA, a global leader in AI computing, underpins our expertise. Together, we've harnessed a potent synergy, enabling us to craft AI servers with unparalleled performance and efficiency."

"ASUS has strong expertise in server infrastructure and data center architecture, and through our work together, we're able to deliver cutting-edge accelerated computing systems to customers," said Kaustubh Sanghani, vice president of GPU product management at NVIDIA. "At Computex, ASUS will have on display a wide range of servers powered by NVIDIA's AI platform that are able to handle the unique demands of enterprises."

Elevating AI success with ASUS MGX scalable solutions

To fuel the rise of generative AI applications, ASUS has presented a full lineup of servers based on the NVIDIA MGX architecture. These include the 2U ESC NM1-E1 and ESC NM2-E1 with NVIDIA GB200 NVL2 servers, and the 1U ESR1-511N-M1. Harnessing the power of the NVIDIA GH200 Grace Hopper Superchip, the ASUS NVIDIA MGX-powered offering is designed for large-scale AI and HPC applications, facilitating seamless and rapid data transfers, deep-learning (DL) training and inference, data analytics and high-performance computing.

Tailored ASUS HGX solution to meet HPC demands

Designed for HPC and AI, the latest HGX servers from ASUS include ESC N8, powered by 5th Gen Intel® Xeon® Scalable processors and NVIDIA Blackwell Tensor Core GPUs, as well as ESC N8A, powered by AMD EPYC™ 9004 and NVIDIA Blackwell GPUs. These benefit from an enhanced thermal solution to ensure optimal performance and lower power usage effectiveness (PUE). Engineered for AI and data-science advancements, these powerful NVIDIA HGX servers offer a unique one-GPU-to-one-NIC configuration for maximal throughput in compute-heavy tasks.

Software-defined data center solutions

ASUS specializes in crafting tailored data center solutions - going beyond the ordinary to provide top-notch hardware along with comprehensive software solutions that meet enterprise needs. Its services cover everything from system verification to remote deployment, ensuring the smooth operations essential for accelerating AI development. This includes a unified package comprising a web portal, scheduler, resource allocations, and service operations.

Moreover, ASUS AI server solutions, with integrated NVIDIA BlueField-3 SuperNICs and DPUs, support the NVIDIA Spectrum-X Ethernet networking platform to deliver best-of-breed networking capabilities for generative AI infrastructures. In addition to optimizing the enterprise generative AI inference process, NVIDIA NIM inference microservices, part of the NVIDIA AI Enterprise software platform for generative AI, can speed up the runtime of generative AI and simplify the development process for generative AI applications. ASUS also offers customized generative AI solutions to cloud or enterprise customers through its collaboration with independent software vendors (ISVs) such as APMIC, which specializes in large language models and enterprise applications.

ASUS's expertise lies in striking the perfect balance between hardware and software, empowering customers to expedite their research and innovation endeavors. As ASUS continues to advance in the server domain, its data center solutions, coupled with ASUS AI Foundry Services, have been deployed at critical sites globally.
