Cisco Unveils Deep Buffer Router to Interconnect AI Data Centers

The AI factory buildout is exposing all kinds of bottlenecks, including in the WANs that connect far-flung data centers. Cisco is addressing this market with a new router, dubbed the Cisco 8223, which it says is the industry’s first fixed 51.2Tbps router supporting deep buffer routing. The router allows hyperscalers to lash their AI factory networks together in what is known as a data center interconnect.

We’re in the midst of a massive effort by hyperscalers to build as many huge data centers as they can, as fast as they can, to house racks of GPUs for AI training and inference. OpenAI is party to the Project Stargate AI alliance, along with Oracle and SoftBank, which has committed to building up to 10 gigawatts worth of data centers (a figure that refers to the electrical power needed to run all the GPUs and systems at full build-out), at a cost of $500 billion. The group is currently building its first data center in Abilene, Texas, dubbed Stargate I, which will consume 5 gigawatts of power.

Not to be outdone, Facebook parent Meta is investing in giant data centers of its own. The first one, dubbed Prometheus, is a 1-gigawatt campus in Columbus, Ohio, which is currently being built and is due to come online in 2026. Meta will follow that up with a much larger data center, dubbed Hyperion, that will consume 5 gigawatts of power when it’s completed in Richland Parish, Louisiana.

Nvidia will be called on to provide much of the computing horsepower in these data centers with its current Blackwell line of GPUs. But there will be a mix of other AI accelerators, including AMD’s forthcoming MI450 GPU, which OpenAI committed to buying in great numbers this week in a deal that will see OpenAI buying 6 gigawatts of AMD GPUs in exchange for a 10% equity stake in AMD. Meta, another big Nvidia customer, recently nabbed Rivos, which was developing a promising new AI accelerator based on RISC-V technology and was reportedly worth $2 billion.

There will be lots of big and fast storage purchased to go along with these massive installations of GPUs and AI accelerators, as we’ll be writing about in the weeks to come (stay tuned). And of course the network gear providers will also have something to crow about, as Meta, OpenAI, and others invest massive sums in copper wiring, and probably a bit of cooler and more energy-efficient co-packaged optics (CPO), to string all these GPUs, CPUs, and storage devices into a coherent whole. And let’s not forget the massive amounts of passive and active cooling that will be needed to keep all this silicon, copper, and glass from melting down, let alone the gigawatts worth of power to make it all hum.

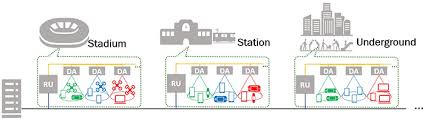

With all this exciting AI infrastructure going into place, it’s easy to lose sight of the need for external networking. These data centers are being built in some fairly remote locations, where land and electricity are cheaper, so the hyperscalers will need some pretty big network pipes, or data center interconnects (DCIs), to connect them to their other massive data centers for AI training and inference workloads.
