Balancing Cost and Resilience: Crafting a Lean IT Business Continuity Strategy

In an ever-evolving digital landscape, businesses face growing challenges in keeping their data safe and their operations running. A string of disruptions at major corporations has only heightened the need for robust backup and recovery mechanisms. Data sits at the heart of every modern business, and how well it is secured and how its risks are managed play a pivotal role in ensuring business continuity.

However, while the importance of backups and disaster recovery plans is universally acknowledged, implementing them can become prohibitively expensive. This financial challenge underscores the need to prioritize and to architect a lean yet resilient IT infrastructure.

A clear checklist is required

While the causes, impacts, and solutions of data-related incidents vary, the overarching principles remain consistent. Your organization likely already has some backups to counter ransomware or equipment failure. So answer this: what recovery point objectives (RPOs) and recovery time objectives (RTOs) can you achieve with your current backup plan if your production servers or cloud instances suddenly vanish? Put another way, how much money would the downtime cost the business if you had to perform a complete disaster recovery?

If that question makes you uneasy, and your role means you should know the answer, it may be time to review your backup and disaster recovery (DR) plans.
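To make the question concrete, it can be turned into arithmetic under a deliberately simple, hypothetical cost model: the worst-case RPO equals the interval between backups (a failure just before the next run loses everything since the last one), the RTO is the time a full restore takes, and both downtime and lost data are charged at a flat hourly rate. The class, function names, and figures below are illustrative assumptions, not measurements or a vendor formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecoveryEstimate:
    """Inputs for a rough full-DR cost estimate (all values hypothetical)."""
    backup_interval_hours: float   # time between successful backups
    restore_hours: float           # time to restore and resume service (RTO)
    downtime_cost_per_hour: float  # revenue/productivity lost per hour offline
    rework_cost_per_hour: float    # cost to re-create one hour of lost data


def worst_case_rpo_hours(est: RecoveryEstimate) -> float:
    # A failure just before the next backup loses everything written since
    # the previous one, so the worst-case RPO equals the backup interval.
    return est.backup_interval_hours


def worst_case_cost(est: RecoveryEstimate) -> float:
    # Linear model (an assumption): downtime for the whole restore
    # plus rework for every hour of data lost.
    downtime = est.restore_hours * est.downtime_cost_per_hour
    data_loss = worst_case_rpo_hours(est) * est.rework_cost_per_hour
    return downtime + data_loss
```

With nightly backups, a 12-hour full restore, a hypothetical $5,000 per hour of downtime, and $1,000 per hour to re-create lost data, `worst_case_cost(RecoveryEstimate(24, 12, 5000, 1000))` comes to 84,000: 60,000 in downtime plus 24,000 in rework. Real costs are rarely linear, but even a rough figure like this gives the business something concrete to weigh against the price of better protection.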

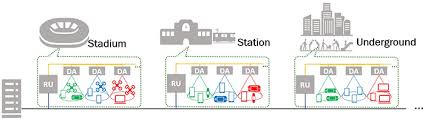

Start with the fundamentals: map out which systems are responsible for which real-world "work". While some companies run siloed infrastructure for each department, there are likely countless dependencies that still need to be mapped. For example, it's obvious that a directory server outage will break authentication for every service and endpoint (a huge but expected impact), but what about your internal ERP system?
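One way to answer the ERP question systematically is to record the map as data and walk it. This is a minimal sketch assuming a hypothetical inventory in which each system lists the systems it depends on; the system names are illustrative. A breadth-first search over the inverted map returns everything a single failure would take down, directly or indirectly.

```python
from collections import deque

# Hypothetical dependency map: each key depends on the systems in its list.
DEPENDS_ON: dict[str, list[str]] = {
    "directory": [],
    "database": [],
    "file-server": ["directory"],
    "erp": ["directory", "database"],
    "e-commerce": ["erp", "database"],
    "manufacturing": ["erp", "file-server"],
    "logistics": ["erp"],
}


def affected_by(failed: str, depends_on: dict[str, list[str]]) -> set[str]:
    """Return every system that directly or indirectly depends on `failed`."""
    # Invert the map so we can walk from a dependency to its dependents.
    dependents: dict[str, list[str]] = {name: [] for name in depends_on}
    for name, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(name)

    # Breadth-first search outward from the failed system.
    impacted: set[str] = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for child in dependents.get(current, []):
            if child not in impacted:
                impacted.add(child)
                queue.append(child)
    return impacted
```

Here `affected_by("directory", DEPENDS_ON)` returns every entry except `directory` and `database`, including `erp` and all three business processes: exactly the kind of indirect impact that is easy to miss when dependencies live only in people's heads.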

If you don't already have a map of your IT infrastructure, create one. Ensure that system dependencies are clearly documented and well understood. Next, list the primary real-world processes your business relies on (e.g., product manufacturing, e-commerce, logistics) and, most importantly, stack-rank them by the financial impact a disruption would have.

Each business will have vastly different requirements depending on its structure and technology stack. However, a cost can always be associated with downtime. This mapping and ranking exercise needs to be reviewed routinely and kept up to date.
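Once each process has an estimated cost, the stack-ranking itself is a simple sort. The sketch below is a minimal illustration with hypothetical fields: an hourly downtime cost, plus the longest outage the business says it can tolerate, used as a tiebreaker so that equally expensive but less tolerant processes come first.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BusinessProcess:
    name: str
    downtime_cost_per_hour: float  # estimated loss while the process is halted
    max_tolerable_hours: float     # longest outage the business can absorb


def stack_rank(processes: list[BusinessProcess]) -> list[BusinessProcess]:
    """Order processes by financial impact, most expensive outage first."""
    # Sort by descending hourly cost; on ties, the less tolerant process wins.
    return sorted(
        processes,
        key=lambda p: (-p.downtime_cost_per_hour, p.max_tolerable_hours),
    )
```

For example, with hypothetical figures of 8,000 per hour for both e-commerce (tolerates 1 hour) and manufacturing (tolerates 4 hours) and 2,000 per hour for logistics, the ranking is e-commerce, manufacturing, logistics. Because the inputs are estimates, rerunning the ranking whenever they change is cheap.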

Building solid foundations

Building a dependable and resilient IT infrastructure isn't easy, but it becomes far more manageable once we break it down into its components.

· High availability (HA) for production environments: If a server fails, the HA system should automatically take over, minimizing downtime. For companies that self-host their systems, this is usually achieved with HA hypervisor clusters paired with similarly clustered HA storage. Cloud deployments can likewise use load balancers and automated health monitoring to keep services online.

· On-site and off-site backups: Regular backup schedules for critical operational tools such as file servers, databases, ERP systems, core service virtual machines, and offline servers should be documented. Depending on the importance of each operational service, appropriate recovery time objectives (RTOs) and recovery point objectives (RPOs) need to be carefully defined. An out-of-date database backup is better than nothing, but it will still cause a significant headache. Finally, off-site backups and disaster recovery (DR) capacity shouldn't be an afterthought. To keep costs in check, retention periods can be shortened and DR equipment or cloud instances scaled down.
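A documented schedule can be checked against the objectives it is meant to meet. The sketch below assumes a hypothetical policy record per service and flags two of the gaps discussed above: a backup interval longer than the target RPO (a failure just before the next run loses a full interval of data) and a missing off-site copy. The field and service names are illustrative; a real check would also verify that backups actually completed and can be restored.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class BackupPolicy:
    service: str
    interval: timedelta     # time between scheduled backups
    target_rpo: timedelta   # maximum acceptable data loss
    has_offsite_copy: bool  # whether a copy leaves the primary site


def policy_gaps(policies: list[BackupPolicy]) -> list[str]:
    """List human-readable problems with the documented backup schedules."""
    problems = []
    for p in policies:
        # Worst case: a failure just before the next run loses a full interval.
        if p.interval > p.target_rpo:
            problems.append(
                f"{p.service}: interval {p.interval} exceeds RPO {p.target_rpo}"
            )
        if not p.has_offsite_copy:
            problems.append(f"{p.service}: no off-site copy")
    return problems
```

For example, a database backed up every 24 hours against a 1-hour RPO is flagged, as is a file server with no off-site copy, while a VM backed up every 4 hours against a 4-hour RPO passes.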

Restoring shouldn't be stressful

In the unfortunate event of a disruption, a three-tier restoration process can ensure business continuity:

1. Automatic failover: HA clusters should be designed to fail over automatically, so that no manual intervention is required during critical moments. This should be enough to handle simple equipment failures.

2. Restore from snapshots or fail over to backup systems: Local snapshots and similar technologies allow servers to roll back an unintended change extremely quickly. If the problem persists or stems from a larger issue (e.g., the entire cluster is down), full restores or failovers to another system should be considered.

3. Restore from remote backups or fail over to the DR site: In the case of major disruptions such as natural disasters, remote backup solutions come into play. Businesses can restore from these backups or, if necessary, fail over to a DR site to resume operations.
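The escalation path can be written down as a small decision function, which also makes a handy starting point for a runbook. This is a minimal sketch: the incident categories in `Scope` are hypothetical simplifications of the scenarios described above, and a real decision would weigh more factors, such as data corruption, security compromise, or estimated restore time.

```python
from enum import Enum


class Scope(Enum):
    """Hypothetical, simplified incident categories."""
    SINGLE_NODE = "single node failure"
    BAD_CHANGE = "unintended change on a server"
    CLUSTER_DOWN = "entire cluster unavailable"
    SITE_LOST = "primary site lost"


def restoration_tier(scope: Scope, ha_failover_succeeded: bool = False) -> int:
    """Pick the lowest restoration tier that can plausibly handle the scope."""
    if scope is Scope.SINGLE_NODE and ha_failover_succeeded:
        return 1  # tier 1: automatic HA failover already handled it
    if scope in (Scope.SINGLE_NODE, Scope.BAD_CHANGE, Scope.CLUSTER_DOWN):
        return 2  # tier 2: snapshot rollback, full restore, or local failover
    return 3      # tier 3: remote backups or DR-site failover
```

Under these assumptions, `restoration_tier(Scope.SINGLE_NODE, ha_failover_succeeded=True)` returns 1, an unintended change or a downed cluster maps to tier 2, and losing the primary site maps to tier 3. Starting at the lowest tier that fits keeps recovery fast and avoids invoking the DR site for problems a snapshot can fix.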

Stay ahead of the curve

Effective monitoring is the backbone of a resilient infrastructure. The approach should focus on:

· Filtering out the noise: Monitoring solutions should ensure that only critical notifications are sent out, preventing information overload and making sure the right people are alerted promptly when critical events inevitably happen.

· Acting quickly and decisively: Time is of the essence during disruptions. IT, DevOps, SIRT, and even PR teams need to be well coordinated for different types of events. From security breaches to data center fires or even mundane equipment failures, anything that might disrupt customers or operations will involve cross-team communication and collaboration. The only way to get better at handling these events is to have documented procedures, a clear chain of command, and practice drills.
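Filtering out the noise usually combines a severity threshold with deduplication, so that a single failing disk does not page the on-call engineer every minute. The sketch below assumes a hypothetical alert format and three severity levels; it keeps only alerts at or above a threshold and suppresses repeats of the same source and check until a configurable window has passed since the last notification for that pair. The names and the 15-minute default are illustrative, not taken from any particular monitoring product.

```python
from dataclasses import dataclass

# Hypothetical severity scale; higher numbers are more urgent.
SEVERITY = {"info": 0, "warning": 1, "critical": 2}


@dataclass(frozen=True)
class Alert:
    source: str       # host or service name
    check: str        # what was checked, e.g. "disk" or "replication"
    severity: str     # one of the SEVERITY keys
    timestamp: float  # seconds since epoch


def filter_alerts(
    alerts: list[Alert],
    min_severity: str = "critical",
    dedup_window_s: float = 900.0,
) -> list[Alert]:
    """Keep alerts at or above min_severity, suppressing repeats of the same
    (source, check) pair within dedup_window_s of the last one sent."""
    threshold = SEVERITY[min_severity]
    last_sent: dict[tuple[str, str], float] = {}
    notify = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        if SEVERITY[alert.severity] < threshold:
            continue  # drop noise below the paging threshold
        key = (alert.source, alert.check)
        previous = last_sent.get(key)
        if previous is not None and alert.timestamp - previous < dedup_window_s:
            continue  # same problem was already reported recently
        last_sent[key] = alert.timestamp
        notify.append(alert)
    return notify
```

For instance, three critical disk alerts from the same host at 0, 60, and 1,000 seconds produce two notifications (at 0 and 1,000), and a warning from another host is dropped unless `min_severity="warning"` is passed. Routing the surviving alerts to the right team is a separate step that depends on your chain of command.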

In conclusion, a comprehensive backup and recovery strategy is essential for businesses aiming for uninterrupted operations. While there are many solutions available on the market, it's crucial to find one that aligns with your business needs. Over the years, companies like Synology have demonstrated expertise in storage and data protection, with numerous success stories that attest to their capabilities.

(By Joanne Weng, Director of the International Business Department, Synology)
